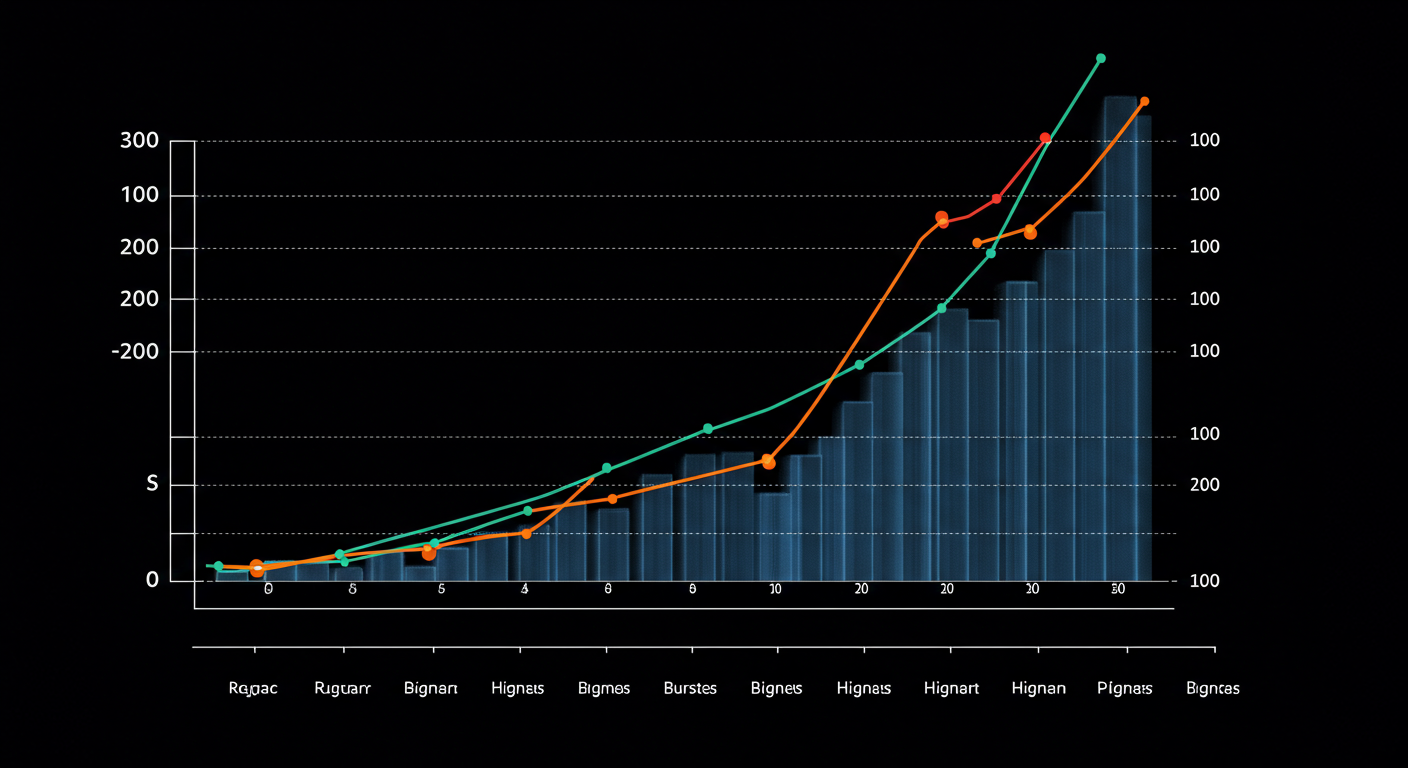

Azure VM Series Misalignment Inflates Spend

Our Azure bill jumped again, and we couldn't pinpoint why. It turned out that perfectly healthy workloads were quietly burning through an extra $3,200 every month.

It started subtly. A slight uptick in our monthly Azure bill, then another, then suddenly finance was asking questions about a recurring $3,200 discrepancy. My initial thought? "Someone left a development environment running." But a quick check revealed no obvious culprits.

As a platform engineer, my job is to ensure our cloud infrastructure is both performant and cost-efficient. So, when a chunk of our budget simply vanished each month without a clear cause, it felt like a personal challenge. The deeper I dug, the more I realized the problem wasn't a single rogue resource, but a systemic misalignment in how we provisioned Azure Virtual Machines.

The culprit: a significant number of our workloads were running on Azure's D-series VMs, general-purpose instances that deliver full CPU performance around the clock and suit workloads with sustained demand. The issue? Many of these workloads were highly bursty: they needed significant compute for short periods but sat largely idle the rest of the time. They were textbook candidates for the B-series, Azure's burstable VMs, which guarantee a baseline level of CPU performance and can burst to full CPU when needed, at a lower price than a comparably sized D-series VM.

Chasing Ghosts: Our Manual Audit Nightmare

My first instinct was to go old school: spreadsheets, Azure Cost Management reports, and a lot of caffeine. The plan was simple: export all VM costs, cross-reference with utilization metrics, and manually identify oversized instances. What I quickly discovered was that this 'simple' plan was anything but.

- Azure Portal Fatigue: Navigating through hundreds of VMs, checking CPU, memory, and network I/O metrics in the Azure portal was mind-numbingly slow.

- Correlation Chaos: Relating a specific VM's usage patterns to its actual workload (and its owner!) was like piecing together a detective novel without any clues. Tags were inconsistent, documentation was sparse, and "who owns this?" Slack messages often went unanswered.

- Dynamic Environments: By the time I identified a potential candidate for rightsizing, the workload might have changed, scaled, or even been decommissioned. It felt like I was constantly chasing a moving target.

We tried implementing stricter provisioning policies, but it was like locking the barn door after the horses had bolted. The existing sprawl of D-series VMs running bursty workloads continued to silently drain our budget. Our approach was reactive, unsustainable, and incredibly frustrating.

The Burstable Breakthrough: Pattern Recognition

The real breakthrough came when I shifted focus from individual VMs to workload patterns. Instead of just looking at average utilization, I started visualizing CPU and memory over longer periods – weeks, even months. What emerged was a stark contrast:

- Consistently High Utilization: A few D-series VMs showed steady, high CPU and memory usage, justifying their series.

- The Burst-and-Idle Cycle: A much larger group of D-series VMs exhibited short, intense bursts of activity, followed by hours or even days of near-idle state. These were the primary culprits. We were paying for dedicated, high-performance CPUs that sat largely dormant.
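To make the two patterns concrete, here is a minimal Python sketch of how a CPU series could be sorted into them. It is an illustration, not the analysis we actually ran: the function names and every threshold (10% idle, 60% steady average, 70% burst peak, 80% idle share) are assumptions you would tune against your own data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class CpuProfile:
    avg: float         # mean CPU % over the window
    peak: float        # highest CPU % sample in the window
    idle_share: float  # fraction of samples at or below the idle threshold


def profile_cpu(samples, idle_threshold=10.0):
    """Summarize a list of CPU % samples (hypothetical helper)."""
    if not samples:
        raise ValueError("need at least one CPU sample")
    idle = sum(1 for s in samples if s <= idle_threshold)
    return CpuProfile(avg=mean(samples), peak=max(samples),
                      idle_share=idle / len(samples))


def classify(samples, idle_threshold=10.0, busy_avg=60.0,
             burst_peak=70.0, min_idle_share=0.8):
    """Label a series 'steady-high', 'burst-and-idle', or 'other'.

    All thresholds are illustrative assumptions, not Azure guidance.
    """
    p = profile_cpu(samples, idle_threshold)
    if p.avg >= busy_avg:
        return "steady-high"       # sustained load: a fixed-performance VM fits
    if p.peak >= burst_peak and p.idle_share >= min_idle_share:
        return "burst-and-idle"    # short spikes, long idle: burstable candidate
    return "other"                 # needs a closer look before any change


# One hour of per-minute samples: 54 near-idle minutes and a 6-minute spike.
print(classify([3] * 54 + [95] * 6))
```

An hour like the one in the example has an unremarkable average, yet it is mostly idle with a sharp spike. That is exactly why average utilization alone hid the problem from us.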

It was an "Aha!" moment. These weren't misconfigured D-series instances; they were perfect candidates for Azure B-series. B-series VMs bank CPU credits whenever they run below their baseline and spend them to burst when demand spikes, offering a strong cost-performance ratio for intermittent workloads. The misalignment wasn't a glitch; it was a fundamental mismatch between workload characteristics and the instance types chosen, often due to default selections or a lack of awareness during provisioning.
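The credit mechanics are easier to reason about with a toy model. The sketch below is a simplification under stated assumptions: a single vCPU, one-minute steps, one credit modeled as one vCPU-minute at 100%, and a VM that is held at baseline once its balance reaches zero. The 20% baseline and 288-credit cap are illustrative defaults, so check the Azure B-series documentation for the size you are actually considering.

```python
def simulate_credits(demand_pct, baseline_pct=20.0, max_credits=288.0,
                     start_credits=0.0):
    """Walk a per-minute CPU demand series through a simplified credit bank.

    Returns (final_credits, throttled_minutes). This is a toy model with
    assumed parameters, not Azure's exact accounting.
    """
    credits = start_credits
    throttled = 0
    for demand in demand_pct:
        # Positive delta: running below baseline, so credits accrue.
        # Negative delta: running above baseline, so credits are spent.
        delta = (baseline_pct - demand) / 100.0
        if delta >= 0:
            credits = min(max_credits, credits + delta)
        elif credits >= -delta:
            credits += delta
        else:
            # Not enough credits to cover this minute's burst.
            credits = 0.0
            throttled += 1
    return credits, throttled


# An idle hour followed by a 10-minute burst at full CPU.
credits, throttled = simulate_credits([0.0] * 60 + [100.0] * 10)
print(f"credits left: {credits:.1f}, throttled minutes: {throttled}")
```

Feeding a candidate VM's real demand history through a model like this is one way to sanity-check a target size: if the throttled count is more than zero, the baseline is probably too low for that workload.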

EazyOps: Uncovering Waste, Automating Savings

This is where EazyOps came into play. Our existing monitoring could show us the data, but it couldn't tell us what to *do* with it, or *how* to do it at scale without significant manual effort and risk. EazyOps offered an intelligent, automated solution that transformed our reactive struggle into proactive optimization.

EazyOps began by seamlessly integrating with our Azure environment, ingesting performance metrics across all our VMs. Its AI-powered engine then went to work, automatically analyzing historical CPU, memory, disk I/O, and network patterns for every single instance. Unlike our manual process, EazyOps could instantly identify:

- VMs with consistent low utilization on expensive D-series instances.

- Workloads exhibiting the characteristic "bursty" pattern, ideal for B-series.

- Optimal B-series instance sizes to match specific burst requirements, preventing under-provisioning.

But EazyOps didn't stop at recommendations. Its true power lay in its automated migration capabilities. After validating the identified candidates, EazyOps could orchestrate the entire migration process to the recommended burstable instances. This involved safely shutting down the VM, resizing it, and restarting it, all with minimal intervention and built-in safeguards to ensure workload continuity.
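For readers who want to picture the stop, resize, restart sequence, here is a minimal Python sketch. It is not EazyOps' implementation. `VmClient`, `FakeClient`, and `resize_vm` are hypothetical names, and the health check with rollback is only my assumption of what a safeguard could look like. In practice you would back the interface with your cloud SDK of choice.

```python
from typing import Protocol


class VmClient(Protocol):
    """Hypothetical interface for this sketch; not a real SDK."""
    def get_size(self, vm: str) -> str: ...
    def deallocate(self, vm: str) -> None: ...
    def set_size(self, vm: str, size: str) -> None: ...
    def start(self, vm: str) -> None: ...
    def is_healthy(self, vm: str) -> bool: ...


def resize_vm(client: VmClient, vm: str, target_size: str) -> bool:
    """Stop, resize, restart. Roll back to the original size if unhealthy.

    Returns True if the VM ends up healthy on target_size, False on rollback.
    """
    original = client.get_size(vm)
    if original == target_size:
        return True
    client.deallocate(vm)
    client.set_size(vm, target_size)
    client.start(vm)
    if client.is_healthy(vm):
        return True
    # Assumed safeguard: restore the previous size when the check fails.
    client.deallocate(vm)
    client.set_size(vm, original)
    client.start(vm)
    return False


class FakeClient:
    """In-memory stand-in for dry runs; enforces this sketch's ordering."""
    def __init__(self, sizes, unhealthy_sizes=()):
        self.sizes = dict(sizes)
        self.unhealthy_sizes = set(unhealthy_sizes)
        self.running = {vm: True for vm in sizes}

    def get_size(self, vm):
        return self.sizes[vm]

    def deallocate(self, vm):
        self.running[vm] = False

    def set_size(self, vm, size):
        if self.running[vm]:
            raise RuntimeError("stop the VM before resizing in this sketch")
        self.sizes[vm] = size

    def start(self, vm):
        self.running[vm] = True

    def is_healthy(self, vm):
        return self.running[vm] and self.sizes[vm] not in self.unhealthy_sizes


fake = FakeClient({"vm-a": "Standard_D2s_v3"})
print(resize_vm(fake, "vm-a", "Standard_B2ms"), fake.get_size("vm-a"))
```

Keeping the cloud calls behind a small interface means the sequence can be rehearsed against a fake before it touches a real VM.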

The Payoff: $3,200 Saved per Month, 60% Cost Cut

The results were immediate and impactful. Within the first month of leveraging EazyOps, we saw a dramatic shift in our Azure consumption patterns:

Direct Cost Savings:

- Eliminated $3,200/month in excess spend from misaligned VMs.

- Achieved a 60% reduction in costs for the targeted workloads.

- Identified and rightsized dozens of VMs across various subscriptions.
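As a quick sanity check on how those two figures relate, here is the arithmetic in Python. It assumes the $3,200 and the 60% describe the same set of VMs. The implied before and after totals are derived from that assumption, not measured, and `implied_costs` is a hypothetical helper.

```python
def implied_costs(monthly_savings, reduction):
    """Derive the implied before and after spend from a saving and a percentage cut."""
    before = monthly_savings / reduction   # spend on the targeted VMs before resizing
    after = before - monthly_savings       # spend on the same VMs afterwards
    return {
        "before": round(before, 2),
        "after": round(after, 2),
        "annual_savings": round(monthly_savings * 12, 2),
    }


print(implied_costs(3200.0, 0.60))
```

Under that assumption, the targeted VMs cost a little over $5,300 a month before the change and a little over $2,100 after, which adds up to $38,400 a year if the saving holds.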

Operational Efficiency:

- Reduced manual audit time from days to minutes.

- Engineers were freed up to focus on innovation, not cost hunting.

- Increased confidence in our Azure spending, knowing we weren't over-provisioning.

Beyond the quantifiable savings, EazyOps provided an invaluable layer of visibility and control that we simply couldn't achieve manually. The era of silent cloud drain was over.

Crucial Lessons from the Cloud Cost Trenches

Our journey through Azure VM series misalignment taught us some critical lessons that are applicable to any cloud environment:

- Defaults Are Dangerous: Never assume the default VM or resource type is the most cost-effective or even the most appropriate. Always evaluate workload patterns against available instance types.

- Workload Pattern is King: It's not just about peak CPU. It's about *how* that CPU is utilized over time. Burstable instances are a game-changer for intermittent workloads, but often overlooked.

- Automation is Non-Negotiable: Manual cloud cost optimization simply does not scale. With dynamic, ever-changing cloud environments, automated analysis and remediation are essential to stay efficient.

- Proactive, Not Reactive: The goal is to prevent misalignment before it happens, or identify it the moment it appears, rather than discovering a bloated bill weeks later.

The Future of Cloud Cost Efficiency

Our experience with Azure VM series misalignment is just one example of the complex challenges modern cloud environments present. As organizations scale and diversify their cloud usage, tools like EazyOps become indispensable. They move beyond basic monitoring to offer intelligent, actionable insights and automation that directly impact the bottom line.

Looking ahead, the goal isn't just to cut costs, but to optimize cloud spend for maximum business value. This means:

- Predictive Optimization: Using AI to forecast future workload patterns and suggest proactive instance changes.

- Continuous Rightsizing: Constantly adapting resource allocations as workloads evolve, ensuring ongoing efficiency.

- Cross-Cloud Intelligence: Providing a unified view and optimization strategy across multi-cloud environments.

For us, EazyOps transformed a frustrating, costly problem into a success story of efficiency and smarter cloud management. It's clear that in today's cloud landscape, an intelligent automation platform isn't a luxury; it's a necessity for anyone serious about controlling costs and maximizing their cloud investment.

About Shujat

Shujat is a Senior Backend Engineer at EazyOps, working at the intersection of performance engineering, cloud cost optimization, and AI infrastructure. He writes to share practical strategies for building efficient, intelligent systems.