When Security Theater Costs $50K: My Kubernetes Over-Isolation Horror Story

"We need complete isolation between teams."

Famous last words from our CISO during a quarterly security review. Six months later, I was explaining to leadership why our Kubernetes infrastructure costs had more than doubled while our actual workload capacity remained roughly the same.

Spoiler alert: Perfect isolation isn't perfect—it's expensive.

The Security Blanket That Became a Straitjacket

It started innocently enough. We had three development teams sharing a Kubernetes cluster, and occasional resource conflicts were causing friction. Team A's memory-hungry batch jobs would starve Team B's web services, leading to those awkward Slack conversations nobody enjoys.

The solution seemed obvious: give everyone their own sandbox.

What we thought would happen:

- No more resource conflicts

- Clear ownership boundaries

- Enhanced security posture

- Happy teams

What actually happened:

- Infrastructure costs increased 180%

- Management overhead became a full-time job

- Resource utilization dropped to 30%

- Security didn't actually improve

The Great Cluster Multiplication

Our "solution" looked something like this:

Before:

- 1 shared cluster with namespace-based separation

- 3 worker nodes (m5.2xlarge)

- Single control plane

- Shared monitoring stack

- Monthly cost: ~$2,800

After:

- 6 dedicated clusters (dev, staging, prod for each team)

- 18 worker nodes (because each cluster needed minimum viable capacity)

- 6 control planes

- 6 monitoring stacks

- 6 ingress controllers

- 6 sets of operational tooling

- Monthly cost: ~$7,200

The math was brutal. We'd traded efficiency for the illusion of security.
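To put a number on "brutal," here is a quick back-of-the-envelope sketch in Python. It uses only the two approximate monthly totals from the lists above; the variable and function names are mine, so treat the output as a rough estimate rather than an audited figure.

```python
# Approximate monthly totals quoted in the before/after lists (USD).
BEFORE_MONTHLY = 2_800
AFTER_MONTHLY = 7_200


def percent_increase(before: float, after: float) -> float:
    """Return the relative increase from `before` to `after`, in percent."""
    return (after - before) / before * 100


extra_per_month = AFTER_MONTHLY - BEFORE_MONTHLY
multiplier = AFTER_MONTHLY / BEFORE_MONTHLY

print(f"Extra spend per month: ${extra_per_month:,}")
print(f"Cost multiplier: {multiplier:.2f}x")
print(f"Increase: {percent_increase(BEFORE_MONTHLY, AFTER_MONTHLY):.0f}%")
```

On those two totals alone, that works out to about $4,400 of extra spend every month for roughly the same workload capacity.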

The Dirty Truth About "Secure" Isolation

Here's what nobody talks about when they're selling you on micro-clusters:

Resource Waste at Scale

Each team's production workload needed maybe 60% of a single node during peak hours. But Kubernetes clusters don't run well on 0.6 of a node: you need whole nodes, plus buffer capacity, plus high availability.

Result? Each team was paying for 3 nodes to use 1.5 nodes' worth of resources.

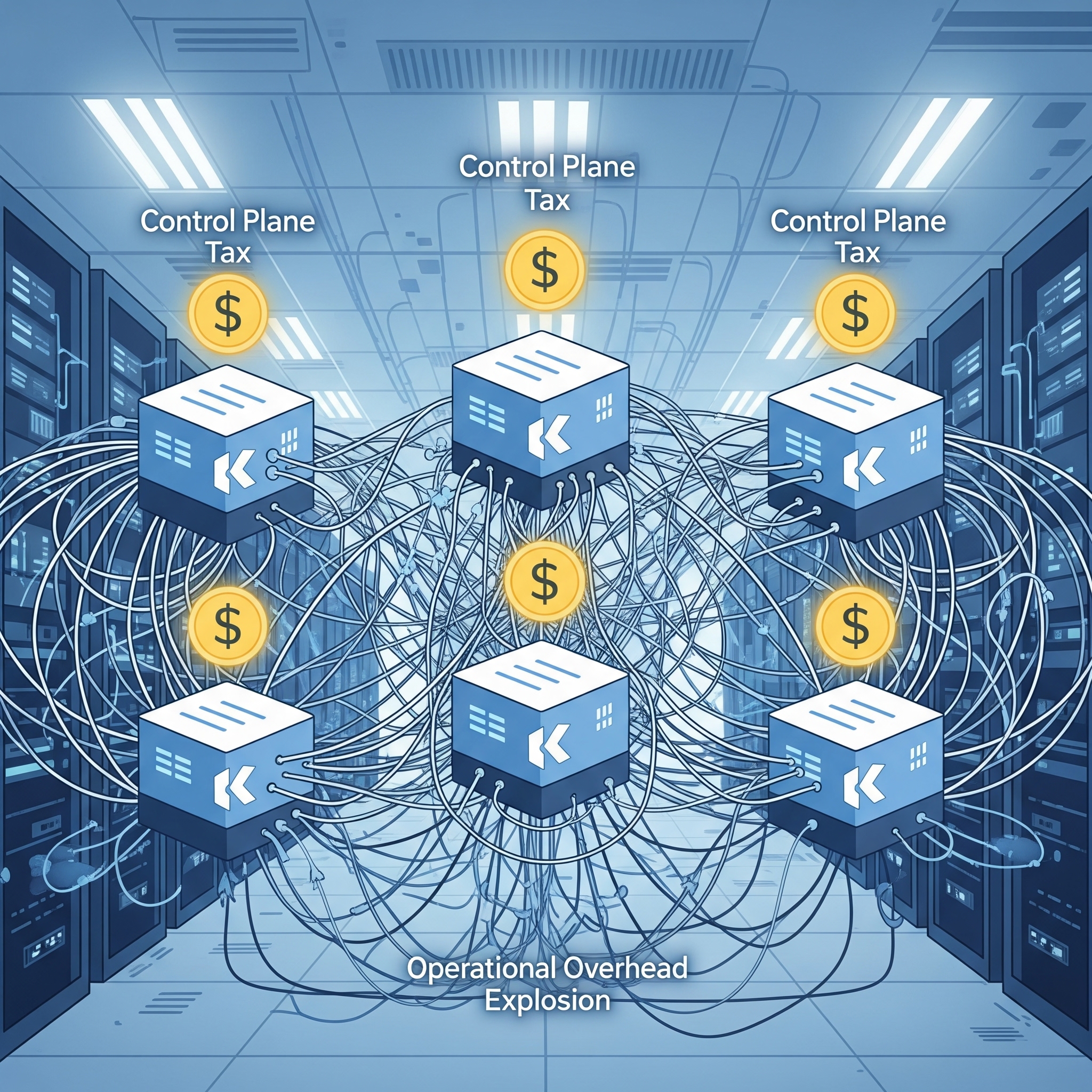
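The fragmentation effect is easy to model. The sketch below is illustrative only: the 30% headroom and the three-node high-availability floor are assumptions I picked for the example, not measured values, and `nodes_required` is a hypothetical helper. The 1.5 nodes of usage per team comes from the figure above.

```python
import math


def nodes_required(peak_demand: float, headroom: float = 0.3, ha_minimum: int = 3) -> int:
    """Whole nodes a cluster must run for a peak demand given in node-equivalents.

    `headroom` (buffer capacity) and `ha_minimum` (high-availability floor)
    are illustrative assumptions.
    """
    sized_for_load = math.ceil(peak_demand * (1 + headroom))
    return max(sized_for_load, ha_minimum)


TEAM_DEMAND = 1.5  # node-equivalents each team actually uses (from the text)
TEAMS = 3
total_demand = TEAMS * TEAM_DEMAND

# One cluster per team: every cluster pays the HA floor on its own.
dedicated_nodes = TEAMS * nodes_required(TEAM_DEMAND)
# One shared cluster: the floor and the headroom are paid once.
shared_nodes = nodes_required(total_demand)

print(f"Dedicated: {dedicated_nodes} nodes, {total_demand / dedicated_nodes:.0%} utilized")
print(f"Shared:    {shared_nodes} nodes, {total_demand / shared_nodes:.0%} utilized")
```

Under these assumptions, the same demand needs 9 nodes when split across three clusters and 6 when pooled, because the rounding-up and the minimum node count are paid per cluster.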

The Control Plane Tax

Every Kubernetes cluster needs a control plane. In our managed EKS setup, that's ~$73/month per cluster just for the privilege of having an API server. Multiply by 6 clusters, and you're paying $438/month for... what exactly? The ability to run kubectl commands in different windows?
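For anyone who wants to check that math, here is a small sketch. It assumes a flat fee of $0.10 per cluster-hour and a 730-hour average month, which is where the ~$73 figure comes from; verify the rate against current pricing for your region and support tier before relying on it.

```python
HOURS_PER_MONTH = 730   # average month: 8,760 hours per year / 12
HOURLY_FEE_USD = 0.10   # assumed managed control plane fee per cluster-hour


def control_plane_cost(clusters: int, hourly_fee: float = HOURLY_FEE_USD) -> float:
    """Monthly control plane spend in USD for a given number of clusters."""
    return round(clusters * hourly_fee * HOURS_PER_MONTH, 2)


for count in (1, 6):
    monthly = control_plane_cost(count)
    print(f"{count} cluster(s): ${monthly:,.2f}/month, ${monthly * 12:,.2f}/year")
```

The fee is small per cluster, but it is pure overhead: it buys no workload capacity at all.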

Operational Overhead Explosion

Remember when you had one cluster to monitor, update, and troubleshoot? Now imagine doing that six times over. Every security patch, every Kubernetes version upgrade, every monitoring configuration change: multiply by six.

Our DevOps team went from managing infrastructure to being full-time cluster babysitters.

The Breaking Point: The Great Certificate Incident

The wake-up call came during a routine certificate renewal. What used to be a single operation now required coordinating across six clusters, each with slightly different configurations because, let's be honest, copy-paste doesn't scale perfectly.

Team C's staging environment went down for three hours because their certificate renewal script had a hard-coded cluster name. Team A's monitoring was broken for a week because we forgot to update their Prometheus configuration.

Meanwhile, an actual security audit revealed that our "ultra-secure" isolated clusters were all using the same IAM role patterns and had nearly identical network configurations. We'd multiplied our attack surface without improving our security posture.

Finding Our Way Back to Sanity

The solution wasn't to abandon multi-tenancy. It was to do it right.

Smart Isolation vs. Brute Force Isolation

Instead of "everyone gets their own cluster," we implemented layered isolation within shared infrastructure:

- Network-Level Separation: Calico network policies that enforce traffic rules between namespaces. Teams can't accidentally (or maliciously) interfere with each other's network traffic.

- Resource Boundaries: Resource quotas and limit ranges that prevent any single team from monopolizing cluster resources. No more batch jobs starving web services.

- RBAC Done Right: Granular role-based access controls that give teams full autonomy within their namespaces while preventing cluster-wide chaos.

- Pod Security Standards: Enforced security policies that prevent privilege escalation and container breakouts without requiring separate clusters.
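As a rough sketch of what those four layers can look like for one tenant, the Python below builds the manifests as plain dictionaries and prints them as a JSON `List` that could be reviewed and then fed to `kubectl apply -f -`. This is not our production configuration: the namespace naming scheme, quota sizes, default limits, and group name are all hypothetical, and it uses the standard `networking.k8s.io/v1` NetworkPolicy (which a CNI such as Calico enforces) rather than Calico's own policy resources.

```python
import json


def tenant_manifests(team: str, cpu: str = "8", memory: str = "16Gi") -> list[dict]:
    """Build per-tenant isolation manifests. All names and sizes are illustrative."""
    ns = f"team-{team}"  # hypothetical naming scheme
    return [
        {   # Pod Security Standards: enforce the "restricted" profile via a label.
            "apiVersion": "v1", "kind": "Namespace",
            "metadata": {"name": ns,
                         "labels": {"pod-security.kubernetes.io/enforce": "restricted"}},
        },
        {   # Resource boundaries: cap the namespace's total requests and limits.
            "apiVersion": "v1", "kind": "ResourceQuota",
            "metadata": {"name": "team-quota", "namespace": ns},
            "spec": {"hard": {"requests.cpu": cpu, "requests.memory": memory,
                              "limits.cpu": cpu, "limits.memory": memory}},
        },
        {   # Limit range: defaults so pods without explicit values still fit the quota.
            "apiVersion": "v1", "kind": "LimitRange",
            "metadata": {"name": "container-defaults", "namespace": ns},
            "spec": {"limits": [{"type": "Container",
                                 "default": {"cpu": "500m", "memory": "512Mi"},
                                 "defaultRequest": {"cpu": "100m", "memory": "128Mi"}}]},
        },
        {   # Network separation: pods accept ingress only from their own namespace.
            # Traffic from an ingress controller in another namespace would need
            # an additional rule.
            "apiVersion": "networking.k8s.io/v1", "kind": "NetworkPolicy",
            "metadata": {"name": "same-namespace-only", "namespace": ns},
            "spec": {"podSelector": {}, "policyTypes": ["Ingress"],
                     "ingress": [{"from": [{"podSelector": {}}]}]},
        },
        {   # RBAC: bind the built-in "edit" ClusterRole inside this namespace only.
            "apiVersion": "rbac.authorization.k8s.io/v1", "kind": "RoleBinding",
            "metadata": {"name": "team-edit", "namespace": ns},
            "roleRef": {"apiGroup": "rbac.authorization.k8s.io",
                        "kind": "ClusterRole", "name": "edit"},
            "subjects": [{"apiGroup": "rbac.authorization.k8s.io",
                          "kind": "Group", "name": f"{ns}-developers"}],  # hypothetical group
        },
    ]


manifests = tenant_manifests("a")
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": manifests}, indent=2))
```

Generating the same five objects for every team from one function is also a cheap way to avoid the configuration drift that bit us with six hand-maintained clusters.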

The Results: Security AND Efficiency

Six months after our "re-consolidation":

Cost Impact:

- 53% reduction in infrastructure spend

- 40% improvement in resource utilization

- 75% reduction in operational overhead

Security Improvements:

- Centralized security policy enforcement

- Simplified certificate and secrets management

- Better audit logging and compliance reporting

- Faster security patch deployment

Developer Experience:

- Consistent tooling and monitoring across teams

- Simplified CI/CD pipelines

- Better cross-team collaboration capabilities

- Faster environment provisioning

Lessons from the Trenches

- Isolation isn't binary: You don't need separate clusters to achieve security boundaries. Kubernetes has sophisticated built-in isolation mechanisms, so use them.

- Shared infrastructure scales better: The operational overhead of managing N clusters grows faster than linearly. At some point, you're spending more on operations than on actual compute.

- Security through complexity is an anti-pattern: More moving parts mean more failure modes and more attack vectors. Simple, well-configured systems are often more secure than complex, heavily isolated ones.

- Monitor your isolation tax: Track not just compute costs but operational overhead. If you're spending more time managing infrastructure than building features, something's wrong.

What's Next: Smart Multi-Tenancy

At EazyOps, we're seeing more teams struggle with this exact problem. The pendulum swung too far toward over-isolation, and now it's swinging back toward intelligent resource sharing.

The future isn't about choosing between security and efficiency—it's about achieving both through better tooling and smarter architectural decisions.

Modern Kubernetes multi-tenancy done right looks like:

- Shared control planes with strong tenant isolation

- Intelligent workload placement and resource allocation

- Automated policy enforcement and compliance monitoring

- Cost attribution and chargeback without infrastructure duplication
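The last item is simpler than it sounds. One minimal way to do chargeback on a shared cluster is to split the bill in proportion to each namespace's requested CPU. The sketch below assumes that model; the `chargeback` helper, the namespace names, the request totals, and the $3,000 bill are all made up for illustration.

```python
def chargeback(monthly_cost: float, cpu_requests: dict[str, float]) -> dict[str, float]:
    """Split a shared cluster bill by each namespace's share of requested CPU.

    Proportional-to-requests is one assumed allocation model among several.
    """
    total = sum(cpu_requests.values())
    if total <= 0:
        raise ValueError("no CPU requests to attribute cost to")
    return {ns: round(monthly_cost * cpu / total, 2) for ns, cpu in cpu_requests.items()}


# Hypothetical inputs: requested CPU cores per namespace and a made-up bill.
requests_by_namespace = {"team-a": 6.0, "team-b": 3.0, "team-c": 3.0}
bill = chargeback(3_000, requests_by_namespace)

for namespace, cost in bill.items():
    print(f"{namespace}: ${cost:,.2f}")
```

Each team still sees a bill of its own, but nobody has to run a separate cluster to produce it.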

Because at the end of the day, the most secure system is one that's cost-effective enough to operate properly and simple enough to understand completely.

About Shujat

Shujat is a Senior Backend Engineer at EazyOps, working at the intersection of performance engineering, cloud cost optimization, and AI infrastructure. He writes to share practical strategies for building efficient, intelligent systems.